Introduction to Keras :

Keras is a high-level neural networks API, capable of running on top of Tensorflow, Theano, and CNTK. It enables fast experimentation through a high level, user-friendly, modular and extensible API. Keras can also be run on both CPU and GPU.

Keras was developed and is maintained by Francois Chollet and is part of the Tensorflow core, which makes it Tensorflows preferred high-level API.

In this article, we will go over the basics of Keras including the two most used Keras models (Sequential and Functional), the core layers as well as some preprocessing functionalities.

Downloading keras in ubuntu(linux):

First we will show how to download it. All the guidance of download is for ubuntu(linux) command line. For windows downloading of keras, follow here.You need tensorflow backend to run Keras. So, first in ubuntu 18.04, go to command, write bash.

Then bash line with $ will open.

Now, write pip3 install tensorflow

It is gonna take some time as the file is quite big.

Then once that is done; write pip3 install keras. This will download keras and now you have the backend in tensorflow ready too.

Starting to work in Keras:

Now, we will explore Keras using spyder. If you do not know what spyder is, spyder is a python IDE, which has different visual appearance as matlab like, Rstudio like and other types. It is really easy to use for beginners, free in ubuntu;(caution: if you generally open spyder in ubuntu, it may open in python 2.7 version; then download spyder3 to open python3 version). To download spyder, you can similarly write pip3 install spyder3; and later on for opening spyder, write spyder3 in bash console.

Now, assume that you have opened a new empty file in spyder3, and have named it Firstkerasfile.py.

Now, your environment looks like the following:

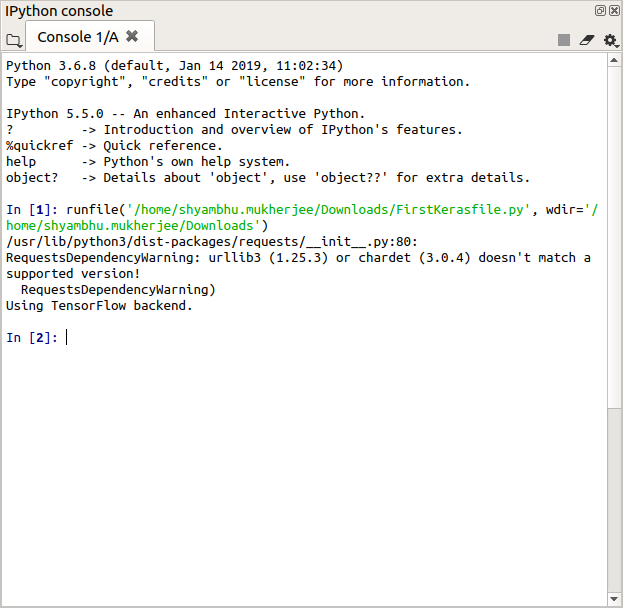

(1) the console:

(2) the code script:

here, as keras uses tensorflow backend, i.e. as it uses the tensorflow library in background, I have imported tensorflow too. Spyder may show the warning

"/usr/lib/python3/dist-packages/requests/__init__.py:80: RequestsDependencyWarning: urllib3 (1.25.3) or chardet (3.0.4) doesn't match a supported version!"

which is a software version related problem and is out of scope for this post. If you want to know about it still now, follow the github link.

Now, proceeding with the keras learning. Upto this was keras import. After this, we will proceed to the exploration of keras datasets, and processes present.

Now, assume that you have opened a new empty file in spyder3, and have named it Firstkerasfile.py.

Now, your environment looks like the following:

(1) the console:

(2) the code script:

here, as keras uses tensorflow backend, i.e. as it uses the tensorflow library in background, I have imported tensorflow too. Spyder may show the warning

"/usr/lib/python3/dist-packages/requests/__init__.py:80: RequestsDependencyWarning: urllib3 (1.25.3) or chardet (3.0.4) doesn't match a supported version!"

which is a software version related problem and is out of scope for this post. If you want to know about it still now, follow the github link.

Now, proceeding with the keras learning. Upto this was keras import. After this, we will proceed to the exploration of keras datasets, and processes present.

Keras datasets and exploration:

Keras comes with 7 inbuilt datasets. The datasets are:

(1) cifar10: cifar10 is a image data, with 10 labels and 50,000 training images and 10,000 images to test the model. The data comes with this training test split inside it. These images are 32 cross 32 pixels in size.

(2) cifar100: cifar100 is a image data, with 100 labels of categorization. There are 50000 training images, while 10000 are testing images. These images are also of 32 cross 32 pixels in size.

Both of these are RGB images; therefore, each image is of shape (3,32,32); and the color is also included as a value in those 3 of the shape.

(3) imdb: This is one of the famous imdb movie datasets. This contains movie reviews and generally used for data exploration, sentiment reviews and other purposes. This data has 25000 datasets. This data is both labelled and also provided with indices on frequency of the data. We will explore this data later; but to see into more details of its exploration; see here.

(4)reuters news data: This is a data on topic classification; containing collections of 11,228 newswires; with over 46 topics. This also contains the words indexed based on frequency.

(5)MNIST hand written digits data: this contains 60,000 images of hand written

10 digits in grey scale, each 28 cross 28 pixels for training, along with 10,000 images for testing data.

(6)MNSIT fashion articles database: Dataset of 60,000 28x28 grayscale images of 10 fashion categories, along with a test set of 10,000 images. This dataset can be used as a drop-in replacement for MNIST.

Follow the official page for more details of this data.

(7)Boston housing price regression model:

Samples contain 13 attributes of houses at different locations around the Boston suburbs around the time of 1970. This data is much used for explaining modelling in regression, regularizations and other concepts.

So, these are about the datasets.

Now, we will apply one by one methods in keras with using these datasets. But before that we have to know the models in keras.

This blog will be updated soon about the models.

(5)MNIST hand written digits data: this contains 60,000 images of hand written

10 digits in grey scale, each 28 cross 28 pixels for training, along with 10,000 images for testing data.

(6)MNSIT fashion articles database: Dataset of 60,000 28x28 grayscale images of 10 fashion categories, along with a test set of 10,000 images. This dataset can be used as a drop-in replacement for MNIST.

Follow the official page for more details of this data.

(7)Boston housing price regression model:

Samples contain 13 attributes of houses at different locations around the Boston suburbs around the time of 1970. This data is much used for explaining modelling in regression, regularizations and other concepts.

So, these are about the datasets.

Now, we will apply one by one methods in keras with using these datasets. But before that we have to know the models in keras.

This blog will be updated soon about the models.

Comments

Post a Comment