Introduction:

We have discussed different aspects of spacy in part 1, part 2 and part 3. Up to this point, we have only used the pre-trained models. In many cases, however, you may need to tweak or improve a model, for example to add new categories to the tagger or the entity recognizer for a specific project or task. In this part, we will discuss how to update the neural network models or train our own, as well as the technical issues that arise along the way.

How does training work:

1. Initialize the model with random weights, using nlp.begin_training().

2. Predict a batch of examples with the current weights.

3. Compare the predictions with the true labels, calculate how the weights should change (the gradient), and update the weights. In spacy, nlp.update performs steps 2 and 3 in a single call.

4. Repeat from step 2.
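To make the four steps concrete, here is a toy, library-free sketch of the same loop. It is an illustration under stated assumptions, not spacy's implementation: a one-feature logistic regression stands in for spacy's neural network, and `train`, `sigmoid` and the tiny dataset are hypothetical names invented for this example.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Squash a score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, epochs=200, lr=0.5, seed=0):
    """Toy logistic-regression loop mirroring steps 1-4 above.

    Hypothetical helper for illustration only; spacy's components are
    neural networks, but they are trained with the same kind of loop.
    """
    rng = random.Random(seed)
    # Step 1: initialize the weights randomly.
    w, b = rng.uniform(-0.1, 0.1), rng.uniform(-0.1, 0.1)
    for _ in range(epochs):  # Step 4: repeat from step 2.
        for x, y in samples:
            # Step 2: predict with the current weights.
            p = sigmoid(w * x + b)
            # Step 3: compare with the true label. For the log loss,
            # (p - y) is the gradient w.r.t. the score, so it says how
            # (and how much) each weight should change.
            error = p - y
            w -= lr * error * x
            b -= lr * error
    return w, b


data = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]
w, b = train(data)
print([round(sigmoid(w * x + b)) for x, _ in data])  # [0, 0, 1, 1]
```

After training, the rounded predictions match the labels; each pass through the data nudges the weights a little in the direction that reduces the error, which is what repeated nlp.update calls do at a much larger scale.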

Refer to the following diagram for a better understanding:

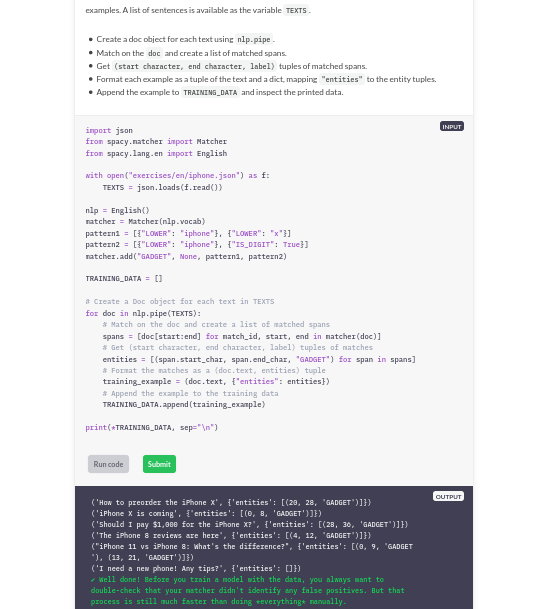

In practice, the first step is to create annotated data, using an annotation tool such as Prodigy or brat, or more simply with spacy's PhraseMatcher. With the PhraseMatcher we can quickly label data with a new label, or add correctly labeled versions of examples the model gets wrong, and then use that data to update the POS or entity taggers. We will run through a small example of updating an entity tagger to include a GADGET label: we will create training data with the PhraseMatcher and then train using the algorithm described above.

Training data typically has the following format:

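(text, {type: [(start_char, end_char, target_label), (start_char2, end_char2, target_label), ...]})

Here type is the kind of annotation; for the entity recognizer the key is "entities", e.g. ("How to preorder the iPhone X", {"entities": [(20, 28, "GADGET")]}).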
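As a rough illustration of producing data in this format, the sketch below labels every occurrence of a list of gadget names using plain regular expressions. It is a simplified stand-in for what the PhraseMatcher does (the real PhraseMatcher matches token sequences in a Doc, not raw strings), and `make_training_data` is a hypothetical helper; only the "entities" key and the (start_char, end_char, label) tuples follow the format above.

```python
import re


def make_training_data(texts, terms, label):
    """Return (text, {"entities": [(start_char, end_char, label), ...]})
    tuples by exact string matching (hypothetical PhraseMatcher stand-in)."""
    # Longest terms first, so "iPhone X" wins over "iPhone" if both are listed.
    alternatives = sorted(terms, key=len, reverse=True)
    # \b keeps us from matching inside a longer word.
    pattern = re.compile(r"\b(?:" + "|".join(map(re.escape, alternatives)) + r")\b")
    examples = []
    for text in texts:
        spans = [(m.start(), m.end(), label) for m in pattern.finditer(text)]
        examples.append((text, {"entities": spans}))
    return examples


TRAIN_DATA = make_training_data(
    ["How to preorder the iPhone X", "iPhone X is coming", "I need a new phone"],
    ["iPhone X", "iPhone"],
    "GADGET",
)
for example in TRAIN_DATA:
    print(example)
```

The third text gets an empty entity list. Keeping such examples is deliberate: they show the model text in which nothing should be tagged as a gadget.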

We can then update the entity model with this dataset using the steps above.

We can also train models from scratch, starting from a completely empty pipeline, using spacy.blank.

spacy.blank("en") creates an empty English pipeline object. Next, create a pipeline component with nlp.create_pipe(name), where name is the same component name used in the ready-made pipelines (for example "ner" for the entity recognizer). Then add the component to the pipeline with nlp.add_pipe, so that it is ready for training. Finally, register the new label on that component with its add_label method, e.g. ner.add_label("GADGET"). To understand how pipelines are structured and what the different components are called, you may want to refer to part 3, where we discussed spacy pipelines and how they work.

Next, we call nlp.begin_training() to initialize the weights and get an optimizer. In each iteration, we take the training data in the format above and split each example into its text and its annotations, i.e. the start and end characters and the labels to train against. We use spacy's minibatch utility (spacy.util.minibatch) to split the training data into batches, and build the list of texts and the list of annotations for each batch.
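The batching step can be sketched without spacy too. `minibatch` below is a hypothetical, fixed-size simplification of spacy.util.minibatch (the real utility also accepts a sequence of batch sizes), and zip(*batch) is one common way to turn a batch of (text, annotations) pairs into the separate texts and annotations that nlp.update takes in spacy v2.

```python
import random


def minibatch(items, size):
    """Yield lists of at most `size` items, in order (simplified stand-in
    for spacy.util.minibatch with a fixed integer size)."""
    batch = []
    for item in items:
        batch.append(item)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:  # the last batch may be smaller
        yield batch


TRAIN_DATA = [
    ("How to preorder the iPhone X", {"entities": [(20, 28, "GADGET")]}),
    ("iPhone X is coming", {"entities": [(0, 8, "GADGET")]}),
    ("I need a new phone", {"entities": []}),
]

random.seed(0)
random.shuffle(TRAIN_DATA)  # shuffle each iteration so batches vary
for batch in minibatch(TRAIN_DATA, size=2):
    texts, annotations = zip(*batch)  # one tuple of texts, one of annotations
    print(texts, annotations)
    # In a spacy v2 training loop, this is where nlp.update(texts, annotations, ...) goes.
```

Shuffling before batching matters: without it, every iteration would see the same batches in the same order.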

We then pass each batch's texts and annotations to nlp.update. As in the training algorithm above, nlp.update compares the model's predictions with these annotations and updates the weights based on that comparison.

The call has the form nlp.update(texts, annotations, losses=losses). To store and observe the loss at each training iteration, create an empty dict (losses = {}) before calling update and inspect it after the updates; nlp.update adds each component's loss to this dict under the component's name (e.g. "ner"). We will discuss how update works internally in a later post where we dive into spacy's code.
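The loss-tracking pattern can be illustrated in library-free form with a toy logistic model. Here `update` is a hypothetical stand-in that mimics the contract described above: it takes one batch plus a losses dict and adds the batch's loss under a component key, the way nlp.update records it under names such as "ner". The data, the learning rate and the key "toy" are illustrative assumptions.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def update(batch, weights, losses, lr=0.5):
    """Hypothetical stand-in for nlp.update: take one gradient step on the
    batch and add its log loss to losses["toy"]."""
    w, b = weights
    grad_w = grad_b = 0.0
    batch_loss = 0.0
    for x, y in batch:
        p = sigmoid(w * x + b)
        batch_loss -= y * math.log(p) + (1 - y) * math.log(1 - p)
        grad_w += (p - y) * x
        grad_b += p - y
    # Accumulate rather than overwrite, like nlp.update's losses argument.
    losses["toy"] = losses.get("toy", 0.0) + batch_loss
    n = len(batch)
    return w - lr * grad_w / n, b - lr * grad_b / n


data = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]
weights = (0.0, 0.0)
rng = random.Random(0)
history = []
for iteration in range(20):
    rng.shuffle(data)
    losses = {}  # created before the updates, inspected after them
    for i in range(0, len(data), 2):  # batches of two examples
        weights = update(data[i:i + 2], weights, losses)
    history.append(losses["toy"])
    print(iteration, round(losses["toy"], 4))
```

Because the dict is recreated at the start of each iteration, each printed value is the total loss of that iteration, and it should shrink as training goes on.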

Printing the losses gives a good idea of how training progresses: the loss should decrease gradually. Finally, see the code snippet for what we discussed above:

Problems in training models:

Several problems can occur when you update a model, and the data you feed into it needs some quality control. Two common problems are:

(1) Catastrophic forgetting:

Say you are updating the existing NER model. You have around 1000 examples of electronic gadgets, and you update the model with them using the label "GADGET". It may very well happen that the model then stops tagging GPE, ORG or some other label. This is because the updates shift the weights so far toward the new label that, mathematically, the importance of the other labels is reduced so much that the model no longer predicts those classes: it "forgets" them. The question and answer below explain this with an example:

This is called catastrophic forgetting. To avoid it, include examples of one or more of the already trained labels in each batch of training data. If you prepare one big training set and then iterate over it in minibatches, make sure it also contains a similar proportion of examples for the other labels. A more practical understanding of this only comes from actually doing it; we will come back to it in a future post about practical implementations with spacy.
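One way to follow this advice is to build every batch from a fixed number of new-label examples plus a few examples of labels the model already knows. The sketch below is a hypothetical helper, not a spacy API; the "old" examples are assumed to exist already (for instance, collected by running the original model over your texts and keeping its predicted entities), and the texts and offsets are made up for illustration.

```python
import random


def mixed_batches(new_examples, old_examples, batch_size, old_per_batch, seed=0):
    """Yield batches that each contain `old_per_batch` examples of already
    known labels, topped up with examples of the new label.

    Hypothetical helper: mixing in old labels is meant to reduce
    catastrophic forgetting while training the new label.
    """
    if not 0 < old_per_batch < batch_size:
        raise ValueError("need 0 < old_per_batch < batch_size")
    rng = random.Random(seed)
    new_per_batch = batch_size - old_per_batch
    shuffled = new_examples[:]
    rng.shuffle(shuffled)
    for i in range(0, len(shuffled), new_per_batch):
        # Draw old-label examples at random (no repeats within a batch).
        batch = shuffled[i:i + new_per_batch] + rng.sample(old_examples, old_per_batch)
        rng.shuffle(batch)  # don't always put the old examples last
        yield batch


NEW = [
    ("I bought an iPhone X", {"entities": [(12, 20, "GADGET")]}),
    ("The iPhone X is fast", {"entities": [(4, 12, "GADGET")]}),
    ("My Pixel 4 broke", {"entities": [(3, 10, "GADGET")]}),
    ("Is the Galaxy S10 worth it?", {"entities": [(7, 17, "GADGET")]}),
]
OLD = [
    ("Apple is based in Cupertino", {"entities": [(0, 5, "ORG"), (18, 27, "GPE")]}),
    ("Sundar Pichai runs Google", {"entities": [(0, 13, "PERSON"), (19, 25, "ORG")]}),
]

for batch in mixed_batches(NEW, OLD, batch_size=3, old_per_batch=1):
    print([text for text, _ in batch])
```

The right ratio of old to new examples is a judgment call that depends on how much data you have for each label; the fixed `old_per_batch` here just makes the idea explicit.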

(2) The model can't learn everything:

Another issue when modeling specific labels is that these are statistical models, so there is a limit to how specific a label can be before a model can no longer learn it. We cannot build a spacy pipeline that learns to label everything at a human level. So when labelling data, keep this in mind and do not make labels too specific: a label should be general enough that the model can decide on it from the surrounding text. Let's look at several examples from the course of good versus bad labels, along with guidance on how to choose labels.

These are the rules to keep in mind while labelling your data for training in spacy.

Conclusion:

With this, our basic four-part spacy exploration ends. In drafting these four parts I followed the official spacy course on the spacy website closely, and used screenshots and completed exercises from it. The result is a concise, shorter series covering almost all of the same content. I omitted the similarity calculations, since spacy's similarity relies on word2vec-style static word vectors, which industry has largely moved away from; I also omitted custom extension attributes, which are complicated and did not fit the flow of the series. While these four parts are only a basic beginning, they should give you enough background to start a spacy-based pipeline for analyzing text data in your work or research.

Note that while spacy is excellent in these respects, some of its models may be outperformed by BERT and other transformer-based models (or their derivatives). So for cutting-edge models and pipelines, you will need BERT, other transformers, or whatever comes after them (GPT-3 and many more to come).

The biggest value of spacy, though, is not its models but its structuring of NLP processes, its modular design, and its ability to build fast yet smooth processing pipelines. So even if you use cutting-edge models, integrating spacy's Cython-driven processing into your pipeline deserves serious thought.

I will write about some of these advanced uses, such as how people use transformers/GPT-3 with spacy pipelines and how to integrate spacy with your TensorFlow code and other frameworks, to finally reach our goal: blazing fast, human-like NLP.

Thanks for reading! Stay tuned for further articles.
